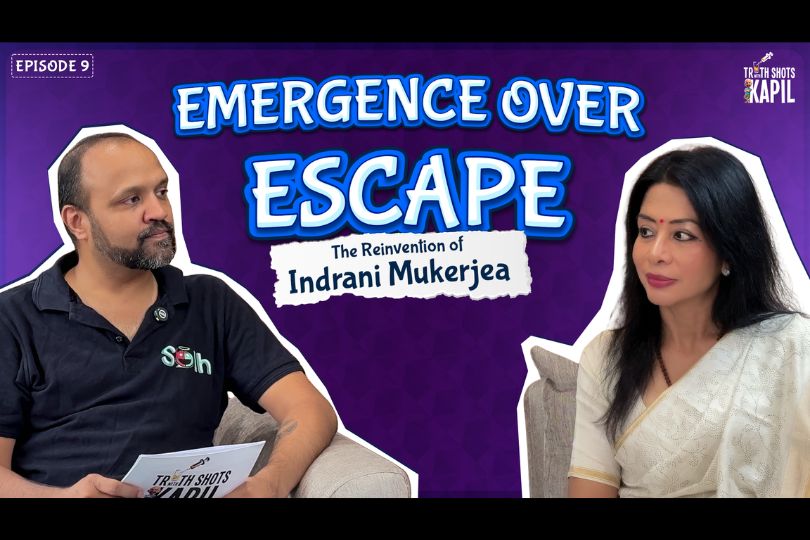

According to George R.R. Martin and Other Authors, OpenAI Stole Their Works to Train ChatGPT: 'We've Come to Fight'

Seventeen authors, including George R.R. Martin, file a class action against OpenAI, alleging identity theft through AI training on their fiction works.

Sep 27, 2023

The authors have filed a class action lawsuit, claiming that using fiction to teach AI is "identity theft on a grand scale."

A group of 17 authors, including Game of Thrones novelist George R.R. Martin, has filed a class action complaint against ChatGPT-maker OpenAI on behalf of fiction writers who allege their work was used to train the generative AI chatbot.

The Authors Guild, an advocacy group, said in a statement that "generative AI threatens to decimate the author profession" and that the lawsuit was filed "because of the profound unfairness and danger of using copyrighted books to develop commercial AI machines without permission or payment."

"This is just the beginning of our fight to protect authors from theft by OpenAI and other generative AI," Authors Guild president Maya Shanbhag Lang stated. "With nearly 14,000 members, the Guild is uniquely positioned to represent authors' rights as the oldest and largest writers' organisation. Our membership is diverse and enthusiastic. Our personnel, which includes a competent legal team, is knowledgeable with copyright law. All of this is to imply that we are not bringing this suit lightly. We've come to fight."

David Baldacci, Mary Bly, Michael Connelly, Sylvia Day, Jonathan Franzen, John Grisham, Elin Hilderbrand, Christina Baker Kline, Maya Shanbhag Lang, Victor LaValle, George R.R. Martin, Jodi Picoult, Douglas Preston, Roxana Robinson, George Saunders, Scott Turow, and Rachel Vail are among the authors whose names appear on the suit.

The complaint, filed this week, specifically accuses OpenAI of using "text from books copied from pirate sites" to train GPT-3.5 and GPT-4.

As the name suggests, "large language models" like the one behind ChatGPT require a significant amount of training data, and the organisations behind them are not known for being picky about what they scrape off the internet. Rather than attempting to avoid scraping hate speech and other harmful content, OpenAI created a second AI system to filter it out.

According to the complaint, ChatGPT previously responded to requests to quote passages from copyrighted publications with "a good degree of accuracy," and has only recently begun declining such prompts. The lawsuit adds that a request for a book summary now frequently "contains details not available in reviews and other publicly available material," implying that the book itself remains part of the training data. The complaint also says that OpenAI confirmed, in a statement to the Patent and Trademark Office [PDF], that copyrighted content appears in its training material.

ChatGPT is also not afraid to try to imitate real authors: I recently asked the free version to write "a short story in the style of George R.R. Martin," and it obliged, beginning, "In the shadowed halls of Castle Blackthorn, a bitter wind howled through the crenellations, carrying with it the promise of winter's relentless grasp." (I do like the use of the word "crenellations.")

According to the Authors Guild, a recent attempt was made to "generate volumes 6 and 7 of plaintiff George RR Martin's Game of Thrones series A Song of Ice and Fire" using OpenAI's software. The project's author has withdrawn the generated volumes from GitHub, but says they are still available if Martin's representatives want to get in touch.

The complaint contains numerous more specific claims of AI mimicry pertaining to each author's work, but the Authors Guild's public statement focuses on the big picture, calling the unauthorised use of fiction writing as AI training material "identity theft on a grand scale."

"Great books are generally written by those who spend their careers, and, indeed, their lives, learning and perfecting their crafts," said Authors Guild CEO Mary Rasenberger. "In order to preserve our literature, authors must be able to control whether and how their works are used by generative AI. GPT models and other existing generative AI machines can only produce material that is derivative of what came before it.

"They mimic sentence form, voice, storytelling, and context from books and other writings they have consumed. The results are only remixes with no human voice added. Human art cannot be replaced by regurgitated culture."

The authors are not asking for an end to large language model development as a whole, but rather that an author's work be used for AI training only with consent and compensation.

"Authors should have the right to decide when their works are used to 'train' AI," novelist Jonathan Franzen stated. "If they choose to opt in, they should be appropriately compensated."

Hidden Door is one gaming firm exploring this route, with ambitions to license authors' fictional worlds and writing styles and use them to build online RPG adventures with its own AI technology. The Wizard of Oz, a public domain property, will serve as the inspiration for the company's first game.

"I know this is controversial, but I don't think AI is innately evil," Hidden Door CEO Hilary Mason said earlier this year to PC Gamer. "I think what we're arguing about is who benefits, and we really want to see it benefit the writers, the creators. That's why we're doing things the way we are."

The Authors Guild previously sued Google over its book scanning for Google Book Search. Google eventually won that case in a decision that paved the way for libraries to establish digital book lending.

I've reached out to OpenAI for comment on the complaint, specifically the allegation that it utilised pirated copies of novels as GPT training material, and will update this piece if I receive a response.
